CDN (How Caching Goes Global — and Why Geography Matters)

A first-principles explanation of CDNs, how caching moves closer to users, and why distance and geography still matter on the internet.

Why Distance Still Matters on the Internet

You open a website hosted on the other side of the world.

Surprisingly, it loads fast.

Images appear instantly.

Pages feel snappy.

But the server isn’t close to you.

So what’s actually happening?

The answer is simple:

The data didn’t travel far at all.
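A rough back-of-the-envelope check shows why distance matters. The sketch below assumes light in optical fiber travels at about 200,000 km/s (roughly two thirds of its speed in a vacuum) and ignores routing, queuing, and server time, so real numbers will be higher. The two distances are made-up examples.

```python
# Assumption: signal speed in optical fiber is roughly 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber, ignoring routing and processing."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


far_origin_ms = min_round_trip_ms(12_000)  # hypothetical origin on another continent
nearby_edge_ms = min_round_trip_ms(100)    # hypothetical copy in a nearby city
print(f"origin: {far_origin_ms:.0f} ms, nearby copy: {nearby_edge_ms:.0f} ms")
```

A page usually needs several round trips, so the gap between the two numbers multiplies quickly.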

The Core Idea (Plain and Honest)

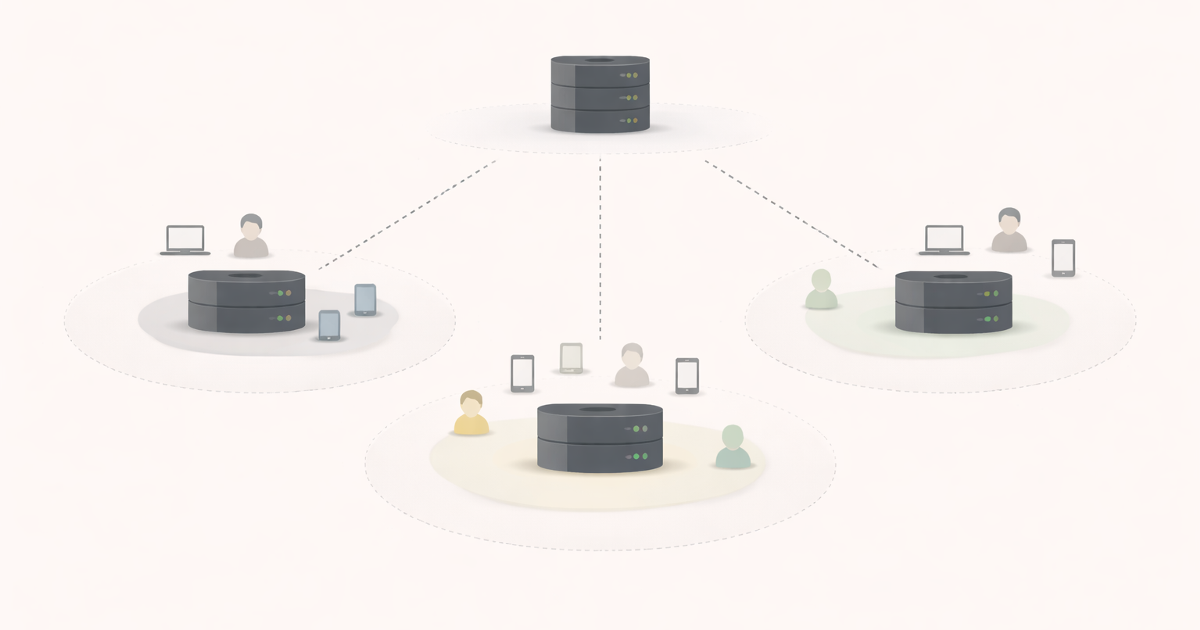

A Content Delivery Network (CDN) is just caching —

but placed closer to users.

Instead of every request going to one central server, responses are stored at many locations around the world.

When you make a request:

- it’s answered by the nearest cached copy, when one exists

- not by the original source

That’s it.

No magic.

Just proximity.
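The idea can be sketched in a few lines. This is a minimal illustration, not how any particular CDN is implemented: `EdgeCache` and `origin` are hypothetical names, and the origin server is stood in for by a plain function.

```python
from typing import Callable, Dict


class EdgeCache:
    """One edge location: serves a local copy if it has one, else asks the origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.origin_fetches = 0

    def get(self, path: str) -> bytes:
        if path not in self._store:
            # Cache miss: one trip to the distant origin, then keep a copy.
            self._store[path] = self._fetch_from_origin(path)
            self.origin_fetches += 1
        # Cache hit (or freshly filled): answered locally.
        return self._store[path]


def origin(path: str) -> bytes:
    # Hypothetical origin server.
    return f"content of {path}".encode()


edge = EdgeCache(origin)
edge.get("/logo.png")  # first request travels to the origin
edge.get("/logo.png")  # second request is answered by the edge
print(edge.origin_fetches)
```

Only the first request pays for the long trip. Everyone after that in the same region gets the nearby copy.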

A Simple Story: Traffic Signals Across Cities

Traffic signals across cities follow the same rules.

But they aren’t controlled from one central room.

Each city runs its own system.

When rules change, updates don’t reach every city instantly. Some intersections follow the new rule first. Others catch up shortly after.

For a brief time, the system isn’t perfectly synchronized — yet traffic still flows.

A CDN works in much the same way.

Content is copied closer to users. Requests are handled locally. Updates propagate gradually.

Speed improves.

Coordination loosens.

What CDNs Usually Cache

CDNs work best with data that:

- is requested repeatedly

- changes infrequently

- is read far more than written

Common examples:

- images and videos

- JavaScript and CSS files

- static pages

- sometimes API responses

The more read-heavy something is, the better it fits a CDN.
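In practice, an origin often tells caches what they may keep through the `Cache-Control` response header. The sketch below maps the categories above to header values. The paths, durations, and the function name `cache_control_for` are assumptions chosen for illustration. The directives themselves (`public`, `max-age`, `s-maxage`, `immutable`, `no-store`) are standard HTTP.

```python
def cache_control_for(path: str) -> str:
    """Pick a Cache-Control value for a response (illustrative policy only)."""
    if path.startswith("/api/account"):
        # Per-user data: no cache should store it.
        return "no-store"
    if path.startswith("/api/"):
        # Read-heavy API responses: shared caches may keep them for 30 seconds.
        return "public, s-maxage=30"
    if path.endswith((".js", ".css", ".png", ".jpg", ".mp4")):
        # Static assets: cache for a year. Assumes file names change
        # whenever the content changes.
        return "public, max-age=31536000, immutable"
    # Pages: browsers revalidate, shared caches may keep a copy for 5 minutes.
    return "public, max-age=0, s-maxage=300"


for example_path in ("/app.js", "/api/products", "/api/account/me", "/about"):
    print(example_path, "->", cache_control_for(example_path))
```

The more read-heavy the category, the longer the lifetime it can usually tolerate.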

Why CDNs Improve More Than Speed

Yes, CDNs reduce latency.

But they also:

- absorb traffic spikes

- reduce load on origin servers

- improve reliability during partial outages

When the origin struggles, cached copies often keep serving users.

CDNs quietly act as shock absorbers.
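Here is a minimal sketch of that shock-absorber behavior: a cache that prefers fresh data but falls back to an expired copy when the origin is unreachable. It is similar in spirit to the HTTP `stale-if-error` directive, but the class, the fake clock, and the flaky origin below are all hypothetical stand-ins.

```python
import time
from typing import Callable, Dict, Tuple


class StaleOnErrorCache:
    """Serves fresh copies when possible and stale copies when the origin fails."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[str, float]] = {}

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and now - entry[1] < self._ttl:
            return entry[0]  # still fresh: no origin traffic at all
        try:
            body = self._fetch(path)
        except ConnectionError:
            if entry is not None:
                return entry[0]  # origin is down: serve the stale copy
            raise  # nothing cached, so the failure reaches the user
        self._store[path] = (body, now)
        return body


state = {"up": True}  # hypothetical origin health switch
now = [0.0]           # fake clock so the example runs instantly


def flaky_origin(path: str) -> str:
    if not state["up"]:
        raise ConnectionError("origin unreachable")
    return f"page {path}"


cache = StaleOnErrorCache(flaky_origin, ttl_seconds=60, clock=lambda: now[0])
first = cache.get("/home")   # fetched from the origin
state["up"] = False          # the origin goes down
now[0] = 120.0               # the cached copy has expired
second = cache.get("/home")  # still answered, from the stale copy
print(first == second)
```

Content that was never cached still fails, which is why this helps with partial outages rather than all of them.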

How This Relates to Consistency

Here’s the trade-off CDNs introduce:

If data is cached all over the world, updates don’t appear everywhere instantly.

Some users see the new version. Others see the old one — briefly.

That’s eventual consistency, at a global scale.

CDNs don’t invent this trade-off. Every cache accepts some staleness in exchange for speed; a CDN just makes it visible worldwide.
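A tiny simulation makes the staleness window concrete. It assumes each edge keeps a copy for a fixed time-to-live (TTL) of 60 seconds and that time is passed in by hand. The edge names and `origin_data` are hypothetical.

```python
from typing import Dict, Tuple

origin_data: Dict[str, str] = {"/banner": "v1"}  # hypothetical origin content


class TtlEdge:
    """An edge that keeps each copy for a fixed number of seconds."""

    def __init__(self, ttl: float) -> None:
        self.ttl = ttl
        self.store: Dict[str, Tuple[str, float]] = {}

    def get(self, path: str, now: float) -> str:
        cached = self.store.get(path)
        if cached is not None and now - cached[1] < self.ttl:
            return cached[0]      # copy is still within its TTL
        value = origin_data[path]  # expired or missing: ask the origin
        self.store[path] = (value, now)
        return value


tokyo, paris = TtlEdge(ttl=60), TtlEdge(ttl=60)
tokyo.get("/banner", now=0)    # Tokyo caches v1 at t=0
origin_data["/banner"] = "v2"  # the origin is updated shortly after
at_10 = (tokyo.get("/banner", now=10), paris.get("/banner", now=10))
at_70 = (tokyo.get("/banner", now=70), paris.get("/banner", now=70))
print(at_10, at_70)  # ('v1', 'v2') ('v2', 'v2')
```

At t=10 the two regions disagree. By t=70 Tokyo's copy has expired and both converge on the new version.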

⚠️ Common Trap

Trap: Treating CDNs as “just static asset hosting.”

Modern CDNs often handle:

- API caching

- TLS termination

- traffic filtering

- retries and failover

Ignoring this leads teams to rebuild features CDNs already provide.

A Situation You’ve Likely Seen

Have you noticed:

- website updates appearing region by region?

- images staying old after a deploy?

- users reporting outdated content briefly?

That’s CDN caching in action.

Not a bug.

A propagation delay.
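One common way teams sidestep the "old image after a deploy" case is to put a content fingerprint in the file name, so a changed file gets a new URL that no cache has seen yet. The sketch below is one possible approach under that assumption. The helper name and the 8-character digest length are arbitrary choices.

```python
import hashlib


def fingerprinted_name(filename: str, content: bytes) -> str:
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    stem, dot, ext = filename.rpartition(".")
    if not dot:
        # No extension: append the digest at the end.
        return f"{filename}.{digest}"
    return f"{stem}.{digest}.{ext}"


old_name = fingerprinted_name("logo.png", b"old pixels")
new_name = fingerprinted_name("logo.png", b"new pixels")
print(old_name != new_name)
```

The old copy can stay cached as long as it likes. Pages simply start pointing at the new name.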

How This Connects to the Series So Far

Caching

CDNs push cached data closer to users.

https://vivekmolkar.com/posts/caching/

Cache Invalidation

Global caches make forgetting slower and harder.

https://vivekmolkar.com/posts/cache-invalidation/

Consistency Models

CDNs operate under eventual consistency by design.

https://vivekmolkar.com/posts/consistency-models/

This is where performance, memory, and agreement collide.

CDNs don’t make data faster.

They make it closer.

🧪 Mini Exercise

Think about your product.

- What data is read far more than written?

- What can tolerate being stale for seconds or minutes?

- What must never be served from a cache?

Those answers define your CDN strategy — even if you never explicitly designed one.

What Comes Next

If data is served from many places…

How do we decide where the real source of truth lives?

Next: Databases vs Caches

Why some systems remember — and others decide.